Yes, you are right: the theoretical max bandwidth of USB2.0 is 60MB/s (480Mbit/s), although due to protocol overhead the practical max bandwidth is less than that. Your calculations would be right if the camera transmitted its image in 32bit RGB format. Of course, this is not the case. There are various color formats used in modern image capture and display devices. Besides the raw RGB that you described, one of the popular ones is YUV. This colorspace is interesting since it has been used in TVs for decades. The interesting thing is that the Y component carries the luminance (grayscale) while the U and V components carry the chrominance (color) information. Because of this separation, the color components can be subsampled with very small visual impact on the image. Hence, with some more processing, we get the YUYV color format that the PS3Eye camera supports. This format is good because it takes only two bytes per pixel on average: each pixel keeps its own Y sample, while every pair of neighboring pixels shares one U and one V sample. So the bandwidth needed to support 640x480 @ 60fps is:

640 * 480 * 16 (bits per pixel) * 60 (fps) = 294912000 (bits per second) or approx 281 (Mb per second, taking 1 Mb = 1048576 bits)

This is still high for USB2.0 but practically doable. Now you may wonder how we get 75fps if this is already pushing the USB2.0 protocol so much.
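As a brief aside before answering that, the two-bytes-per-pixel figure for YUYV can be made concrete with a small sketch. It assumes the usual packed byte order Y0 U Y1 V, where each 4-byte group describes two horizontally adjacent pixels. The function name `unpack_yuyv` is hypothetical and is not part of any camera SDK.

```python
def unpack_yuyv(row: bytes):
    """Split one packed YUYV row into per-pixel (Y, U, V) tuples.

    Assumes the byte order Y0 U Y1 V: two neighboring pixels keep their
    own luminance sample but share a single U and a single V sample.
    """
    if len(row) % 4 != 0:
        raise ValueError("a YUYV row must be a multiple of 4 bytes")
    pixels = []
    for i in range(0, len(row), 4):
        y0, u, y1, v = row[i:i + 4]
        pixels.append((y0, u, v))  # left pixel of the pair
        pixels.append((y1, u, v))  # right pixel reuses the same chroma
    return pixels


# Illustrative data: one dark and one bright pixel with neutral chroma.
row = bytes([16, 128, 235, 128])
pixels = unpack_yuyv(row)
bytes_per_pixel = len(row) / len(pixels)
print(pixels, bytes_per_pixel)
```

Four bytes come in and two pixels come out, which is where the 16 bits per pixel in the calculation above comes from.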

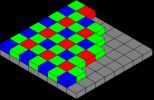

Fortunately, there is another format, and that is the native sensor data format. The main difference between a color and a monochrome CMOS sensor is that the color one has a special color filter array applied on top of the sensor pixels. This filters the light so that each pixel of the sensor receives only one color component. Contrary to popular belief, a color sensor does not have any more physical pixels than a monochrome one. This means the color CMOS sensor in the PS3Eye has a total of 640x480 pixels, each of which carries different color information according to the Bayer filter mosaic pattern (see attached image). Because of this, if we were to transmit this raw sensor data at one byte per pixel, the bandwidth requirement for 640x480 @ 60fps would be:

640 * 480 * 8 (bits per pixel) * 60 (fps) = 147456000 (bits per second) or approx 140 (Mb per second, taking 1 Mb = 1048576 bits)
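The arithmetic for the three formats discussed here can be compared side by side with a short sketch. It only multiplies out width, height, bits per pixel and frame rate, and relates the result to the 480Mbit/s (60MB/s) theoretical USB2.0 figure. Protocol overhead is ignored, so the real headroom is smaller. The names `required_bits_per_sec` and `report` are made up for this illustration.

```python
USB2_BITS_PER_SEC = 480_000_000  # theoretical maximum, i.e. 60 MB/s

# Bits per pixel for the formats mentioned in this post.
BITS_PER_PIXEL = {"RGB32": 32, "YUYV": 16, "BAYER_RAW": 8}


def required_bits_per_sec(width, height, bits_per_pixel, fps):
    """Raw payload bandwidth of an uncompressed video stream."""
    return width * height * bits_per_pixel * fps


def report(width=640, height=480, fps=60):
    """Return (format, bits per second, share of USB2.0) for each format."""
    rows = []
    for name, bpp in BITS_PER_PIXEL.items():
        bps = required_bits_per_sec(width, height, bpp, fps)
        rows.append((name, bps, bps / USB2_BITS_PER_SEC))
    return rows


for fps in (60, 75):
    for name, bps, share in report(fps=fps):
        print(f"{fps} fps {name:9s} {bps:>11d} bit/s  {share:6.1%} of USB2.0")
```

At 60fps the 32bit RGB stream needs more than the bus can carry even in theory, while the raw Bayer stream needs half of what YUYV does, which leaves room for higher frame rates.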

This is one of the main reasons why I chose to use this format in the CL-Eye Driver, since it not only reduces the overall amount of data transferred but also allows more cameras to be used on the USB2.0 bus. You can examine the raw sensor image data using the CL-Eye Platform SDK API by selecting the CLEYE_BAYER_RAW camera color mode in the CLEyeCreateCamera function. In order to get full 32bit RGB color information from an image obtained this way, you would have to do some additional computation. Fortunately, this is all handled by the driver when using the other CLEYE_MONO and CLEYE_COLOR modes.
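To give a rough idea of what that additional computation involves, here is a minimal nearest-neighbor demosaicing sketch. It is not the algorithm the driver uses, and it does not call the SDK. It assumes an RGGB layout for each 2x2 Bayer cell, which may not match the actual PS3Eye sensor, and the function name `demosaic_nearest` is hypothetical.

```python
def demosaic_nearest(raw: bytes, width: int, height: int):
    """Turn an 8-bit Bayer frame into rows of (R, G, B) tuples.

    Assumption: every 2x2 cell is laid out as
        R G
        G B
    All four pixels of a cell receive the same color, built from the
    cell's red sample, the average of its two green samples, and its
    blue sample. Real demosaicing interpolates far more carefully.
    """
    if width % 2 or height % 2:
        raise ValueError("width and height must be even")
    if len(raw) != width * height:
        raise ValueError("expected one byte per sensor pixel")
    rgb = [[None] * width for _ in range(height)]
    for y in range(0, height, 2):
        for x in range(0, width, 2):
            r = raw[y * width + x]
            g1 = raw[y * width + x + 1]
            g2 = raw[(y + 1) * width + x]
            b = raw[(y + 1) * width + x + 1]
            pixel = (r, (g1 + g2) // 2, b)
            for dy in (0, 1):
                for dx in (0, 1):
                    rgb[y + dy][x + dx] = pixel
    return rgb


# Illustrative 2x2 frame: one Bayer cell with R=200, G=100 and 50, B=30.
frame = demosaic_nearest(bytes([200, 100, 50, 30]), 2, 2)
print(frame)
```

The input is one byte per pixel, as transferred over USB, and the color image is reconstructed on the host side, which is the trade of bandwidth for computation described above.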

AlexP
